Building AI systems that interact and connect with your data often begins with a significant challenge: the absence of an initial training set with ground truth responses to user queries. This difficulty is compounded by the following:

- 💰 Building a ground truth dataset requires substantial cost and time investments, as it often involves manual labeling, validation, and continuous updates.

- ❓ You may not even know what questions users or stakeholders will ask today, let alone in the future.

- 📈 Data sources are often transient, and subject to change over time - what is relevant today may not be relevant tomorrow.

These factors can undermine or render conventional evaluation strategies based on static, labeled datasets impractical.

However, this does not mean building a reliable and trustworthy AI system is out of reach - or that you should simply fly blind. Leveraging large language model (LLM)-based evaluations can offer a flexible and robust path forward, helping to democratize the adoption of AI for all.

In this blog post, we will explore a retrieval-augmented generation (RAG) case study based on the Finance and Prosperity 2024 report by the World Bank. This report contains a wealth of information on global economic trends, policy recommendations, and financial strategies. Along the way, we will introduce LLM-based evaluations and demonstrate how they can be used in practice to optimize AI systems and enable dynamic, on-the-fly evaluations to support informed decision-making.

#Case Study Part 1: Can You Trust Your AI's Responses?

Let's say you have just built an AI pipeline that can retrieve information from the Finance and Prosperity 2024 report and respond to user queries.

You then feed in the following query:

Explain the impact of high nonperforming loan (NPL) ratios in EMDE banking systems.

To which your AI pipeline responds with something like:

High nonperforming loan (NPL) ratios in Emerging Markets and Developing Economies(EMDEs) can have significant and multifaceted impacts on banking systems, economic stability, and overall financial health. Here are some key points to consider:1. Capital Adequacy and Bank Resilience: While many banks in EMDEs maintain sound capital and liquidity buffers, a high NPL ratio can threaten these buffers. A reverse stress test indicates that a substantial increase in NPLs could lead to undercapitalization in a notable subset of banks, particularly in lower-income countries. For instance, around 20% of banks in lower-income EMDEs could become undercapitalized with just a 5-percentage-point increase in NPL ratios, which is a significant concern given that historical data shows that peak NPLs often double during banking crises.2. Economic Growth and Credit Availability: High NPL ratios can restrict banks' ability to lend, as they may become more risk-averse and tighten credit conditions. This can stifle economic growth, particularly in developing economies where access to credit is crucial for businesses and consumers. When banks are burdened with high levels of nonperforming loans, they may prioritize cleaning up their balance sheetsIn summary, high NPL ratios in EMDE banking systems pose significant risks to financial

While the response is compelling and detailed, you might wonder: how can you tell if the AI is actually providing a reliable response based on the provided document?

Within the context of this particular case study, unreliable responses or hallucinations could impact decision-making in critical areas. For instance, policymakers may prioritize responses to overstated risks while neglecting more pressing issues, such as improving governance or enhancing bank capitalization frameworks.

From a broader perspective, a solution to this problem is vital for the general adoption of AI systems, as unfaithful claims can undermine the credibility and perceived reliability of AI tools, potentially reducing adoption and trust for assisting with decision-making.

So what are the options for tackling this problem?

- 📄 Manual Verification: You could of course meticulously sift, page-by-page, through the document yourself to verify it. This approach is neither scalable nor an efficient use of time.

- 🔍 Retrieved Information Review: You could get your system to return the information it retrieved and used from the document to generate your response. This is a good start, but it doesn't connect the dots between the information retrieved and the response generated.

- 💡 LLM-Based Evaluations: You could use LLM-based evaluations such as Faithfulness, Answer Relevancy and Context Precision.

Let's now explore what LLM-based evaluations are, and how they can provide a qualitative and quantitative way of validating AI-generated responses.

#What Are LLM-Based Evaluation Metrics?

LLM-based evaluation metrics provide a systematic approach to assess and enhance the quality of the outputs produced by AI systems, even in the absence of a ground truth labeled dataset. Here, we focus on three key metrics: Context Precision, Answer Relevancy, and Faithfulness. These metrics use LLMs to help bridge the gaps between retrieved information, user intent, and the generated responses. Rather than conventional statistical loss functions, these metrics employ LLMs with carefully crafted prompts to perform qualitative reasoning to evaluate AI systems.

Note that there are many more LLM-based metrics out there, but these typically require a ground truth dataset to compare the generated responses against. For a more technical deep dive into the world of LLM-based evaluations for RAG systems, we recommend reading about the RAGChecker framework.

#Context Precision

Definition: Context Precision measures how well the retrieved context aligns with the user query.

How It Works:

- Analyzes each retrieved chunk for its relevance to the user query.

- Classifies each chunk as relevant or irrelevant to the user query.

- Calculates a quantitative score using the formula:

Context Precision = Number of Relevant Chunks / Total Number of Retrieved Chunks

Why It Matters: High context precision ensures that the system retrieves focused and high-quality information relevant to the user query.

#Answer Relevancy

Definition: Answer Relevancy evaluates how effectively the AI-generated response addresses the user query.

How It Works:

- Analyzes each statement in the generated response to determine its relevance to the user's original query.

- Classifies each statement as relevant or irrelevant based on whether it directly answers the user's intent and information needs.

- Calculates a quantitative score using the formula:

Answer Relevancy = Number of Relevant Statements / Total Number of Statements in Response

Why It Matters: High answer relevancy ensures that the system effectively addresses user queries, resulting in more helpful and actionable responses.

#Faithfulness

Definition: Faithfulness assesses the extent to which the generated response accurately reflects the information in the retrieved chunks.

How It Works:

- Compare each key statement in the generated response with the content in the retrieved chunks.

- Classify each statement as faithful or unfaithful based on whether it aligns with the claims, facts, and entities present in the retrieved context.

- Calculate a quantitative score using the formula:

Faithfulness = Number of Faithful Statements / Total Number of Statements in Response

Why It Matters: Faithfulness ensures that the AI-generated content is trustworthy and factually accurate, which is crucial in domains requiring high reliability (e.g., legal, finance, and medical use).

#Case Study Part 2: Evaluating Response Faithfulness

Let's now return to our earlier problem: How can we verify if an AI's response is reliably based on the provided document? We'll answer this by evaluating the faithfulness of the previous response we obtained.

To do this, we have deployed a custom faithfulness component in the RAG pipeline. The output for the same user-query and response is as follows:

Evaluation of Faithfulness:1. Capital Adequacy and Bank Resilience: - Generated Response: States that a high NPL ratio can threaten capital and liquidity buffers, with a reverse stress test indicating that around 20% of banks in lower-income EMDEs could become undercapitalized with a 5-percentage-point increase in NPL ratios. - Retrieved Chunks: Confirms that around 20% of banks in lower-income EMDEs would be undercapitalized following a 5-percentage-point increase in NPL ratios. It also mentions that most banks can handle sizable credit shocks but that a weak tail exists. - Faithfulness: Faithful. The generated response accurately reflects the information from the retrieved chunks. and the potential for credit risk shocks, they do not explicitly mention the

As you can see, the faithfulness evaluation classifies each statement made in the generated response as either faithful or unfaithful, qualitatively justifying its decision using information from the retrieved chunks. It also provides a quantitative score which can be extracted, recorded and monitored.

It turns out that the response contains information that is unfaithful to the retrieved chunks used to generate it. This particular case can be considered a partial hallucination, as it contains a mix of information that is grounded in the retrieved chunks, and information that is fabricated or speculative.

#How To Leverage LLM-Based Evaluations in Practice

Now that we have introduced the concept of LLM-based evaluations and seen how faithfulness can be used to evaluate an AI's response, let's explore how these evaluations can be leveraged in practice to enhance the performance, reliability, and user experience of AI systems.

#1. 📊 On-the-Fly Metrics for Enhanced Decision-Making

You can present LLM evaluation metrics alongside AI-generated responses as real-time indicators of response quality. This provides transparency and allows users to make informed decisions about the information they receive. Here are some examples of how you could implement these metrics:

-

Faithfulness Indicators: Show how well the response aligns with source material:

- 🟢 High (> 0.8): Claims are well-supported by sources

- 🟡 Medium (0.5 - 0.8): Some claims need verification

- 🔴 Low (< 0.5): Multiple unsupported claims detected

-

Display Metrics: Embed context precision and faithfulness metrics directly into your interface. This helps users understand:

- Whether the right information was retrieved

- Whether the response is trustworthy and reliable

-

Guided Actions Based on Metrics: These metrics enable proactive decision-making, such as deciding whether to rely on an AI-generated response or seek validation through alternative sources or manual review:

Score Range Recommended Actions > 0.8 Proceed with confidence 0.5 - 0.8 Review key claims and sources < 0.5 Request new response, update query or consult alternative sources

#2. 📈 LLMOps: System Monitoring and Optimization

LLM-based evaluations can serve as an integral part of system monitoring pipelines, helping to ensure long-term AI performance persistence. Here are some examples of how you can use these metrics to monitor and optimize your AI system:

-

Pipeline Optimization: Optimize your AI pipeline by adjusting the retrieval and generation strategies to maximize faithfulness, answer relevancy and context precision.

-

Performance Dashboards and Anomaly Detection: Continuously record LLM-based metrics in a dashboard and establish baseline values. Use these baselines to detect anomalies in performance, such as unexpected drops in context precision, which may indicate errors in the retrieval process or document parsing failures.

-

Automatic Feedback Loops: Implement automated retraining or adjustment mechanisms. If the evaluation scores consistently indicate poor faithfulness, the system could automatically refine its retrieval or generation steps by incorporating better filtering strategies or using more reliable sources of context.

#3. 🔮 Agentic AI Workflows for Intelligent Automation

LLM evaluations can play a crucial role in agentic AI workflows, guiding agents to dynamically choose actions rather than relying on static rules.

-

Adaptive Retrieval Actions:

In RAG systems, if a response's context precision is low, an agent can automatically decide to search the web for additional sources or query other internal databases to supplement the missing context. Check out our Corrective RAG pipeline to see this in action. -

Interactive User Engagement:

An agent could detect low answer relevancy in generated responses and decide to ask clarifying questions to gather more specific user input, ensuring more accurate and actionable answers. -

Confidence-Driven Actions:

In applications like customer support or finance advisory, an agent can compare evaluation metrics and decide whether to present the response, request validation, or escalate the query to a human expert.

#Case Study Part 3: Optimizing Our RAG Pipeline

To illustrate the benefits that can be obtained from using LLM-based evaluations, we optimized the RAG pipeline in our case study on the Finance and Prosperity 2024 report, focusing on improving the faithfulness of its responses.

#Initial Evaluation

To evaluate the pipeline, we generated ~70 example user queries using OpenAI's

gpt-4o model with the document as context. We instructed the model to generate

queries that are:

- Directly relevant and included in the document

- Related, but not present in the documents

- Unrelated to the document

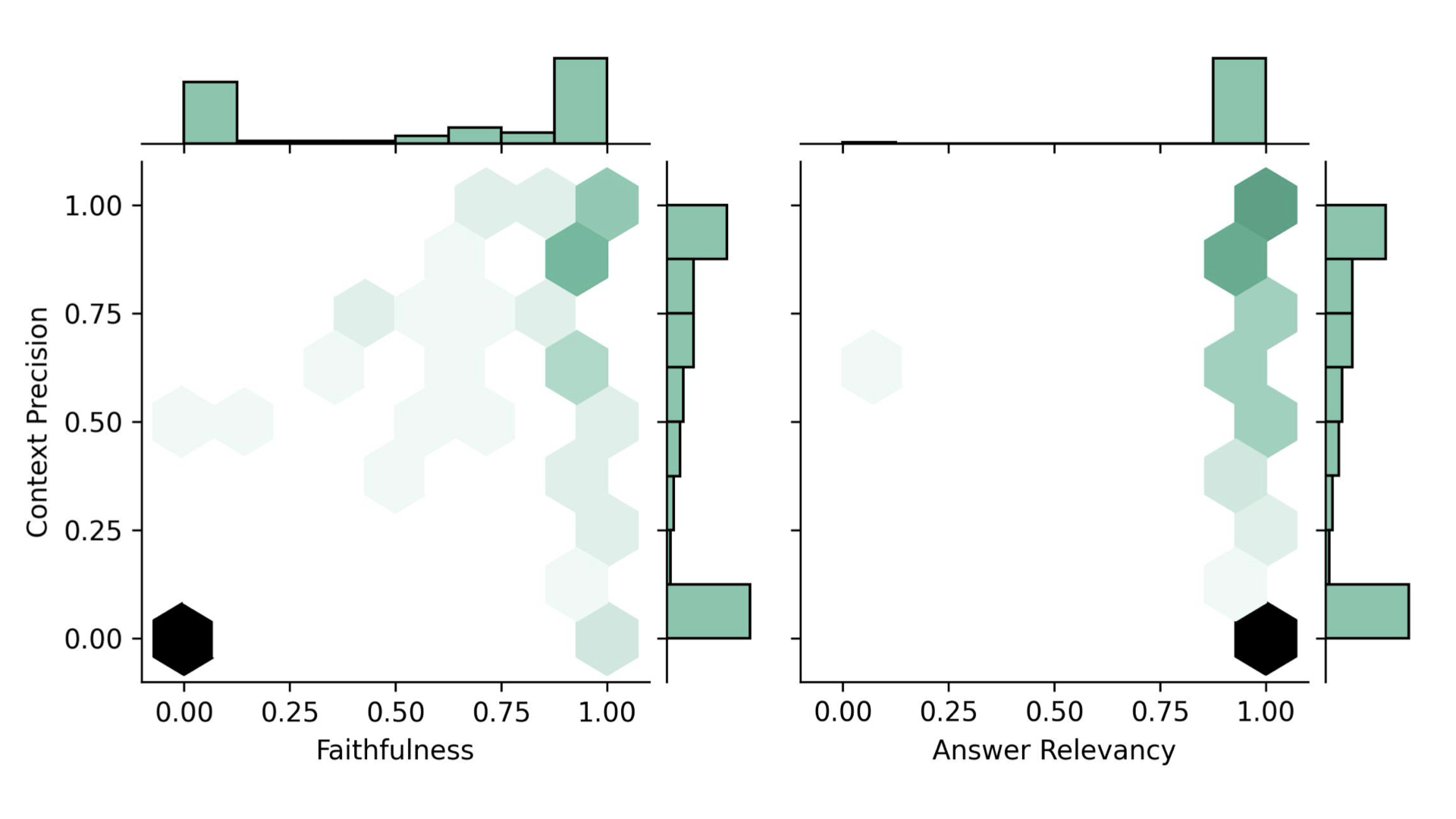

We then customized our initial RAG pipeline with LLM components that evaluate context precision, answer relevancy and faithfulness. The pipeline was then executed for each query, with the evaluation metrics recorded. The mean values for each metric are reported below:

- Mean Context Precision: 0.47

- Mean Answer Relevancy: 0.99

- Mean Faithfulness: 0.58

Alarm bells should be ringing at this point. First, the Mean Answer Relevancy is suspiciously high, especially given that I am using some queries that are deliberately unrelated to the document. Second, our responses are, on average, only 58% faithful to the retrieved context.

Please see the visualization of the distributions and relationships between these metrics below.

Looking at these plots, we can gain an understanding of how our system is currently behaving:

- The left-most plot shows a very high-density dark region where context precision and faithfulness are both ~0. In other words, this is where the pipeline is unable to retrieve relevant information from the document, so it hallucinates responses.

- The right-most plot paints a similar picture, demonstrating that answer relevancy and context precision are almost entirely uncorrelated. This means that the system delivers highly relevant answers, even when the retrieved context is not relevant. This is a bad sign, as it again indicates that the system is hallucinating in its responses.

Based on these findings, even in the absence of any ground truth labels, we can see that the pipeline is hallucinating in its responses when it is unable to retrieve relevant information from the document.

#Pipeline Optimization

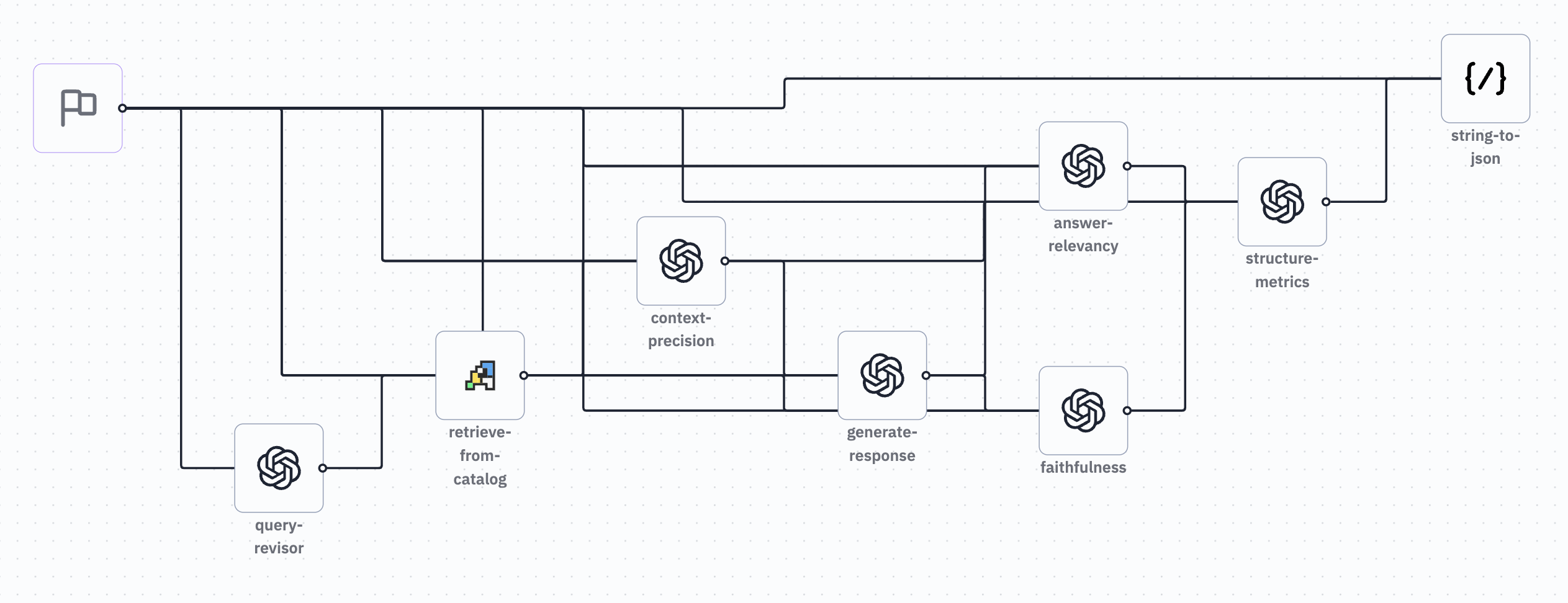

To improve the pipeline, we took the following steps:

- Query Revisor: We created a custom component that revises the user query into a more suitable search query to retrieve relevant information from the document.

- Prompt Optimization: The prompt and system prompt for the LLM that generates the response were updated with much more explicit instructions to be faithful to the retrieved context.

To give you a sense of what the pipeline looks like, the pipeline preview of the optimized RAG pipeline with LLM-based evaluations is shown below:

#Evaluating the Optimized Pipeline

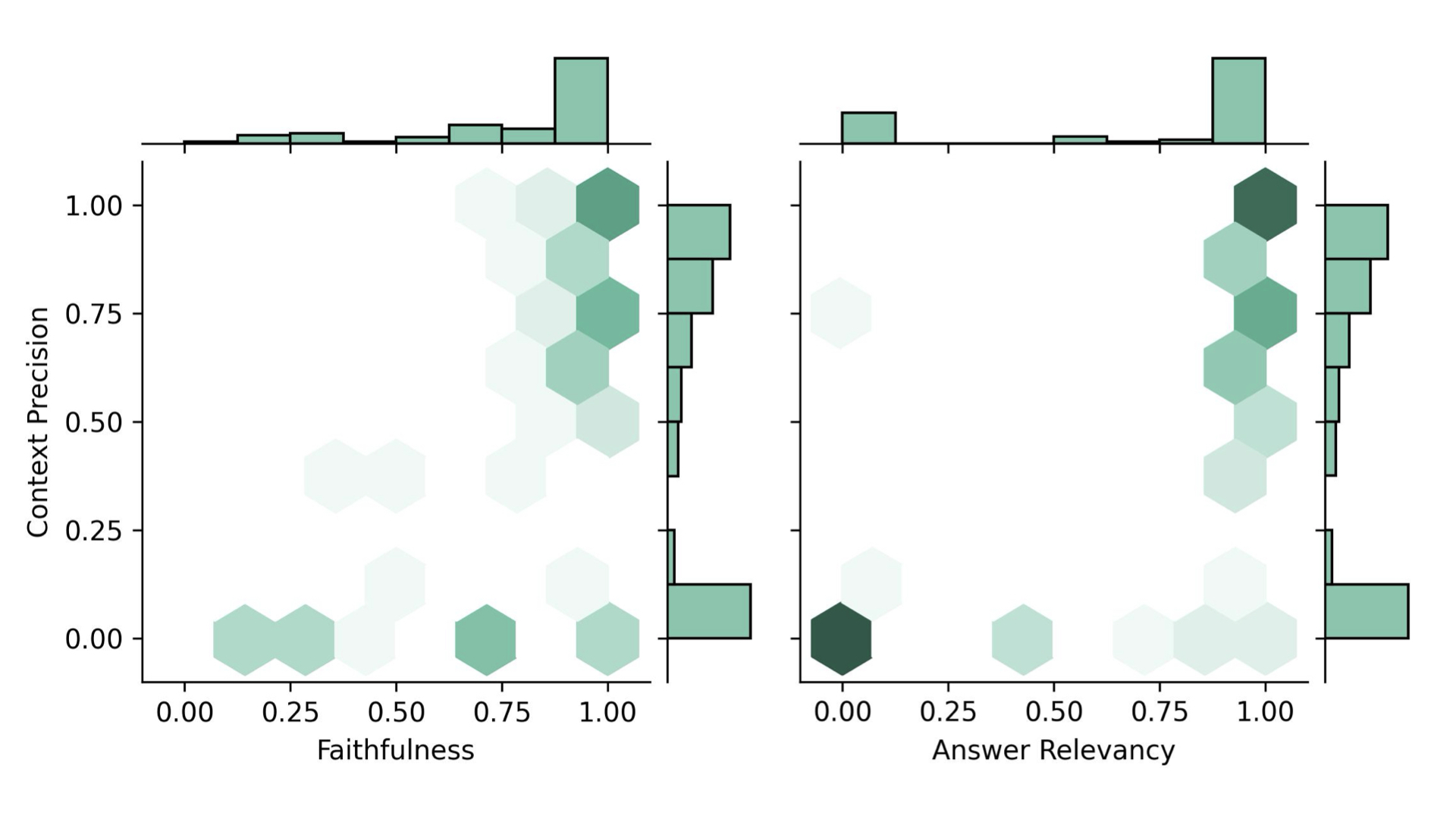

We then executed the pipeline for the same generated queries as before, again recording the evaluation metrics. The mean values for each metric are reported below:

- Mean Context Precision: 0.50 (+0.03)

- Mean Answer Relevancy: 0.72 (-0.27)

- Mean Faithfulness: 0.80 (+0.22)

While mean answer relevancy has decreased, mean faithfulness has increased, indicating that the number of hallucinations has been reduced 🎉!

Let's now visualize the distributions and relationships of these metrics.

These plots now paint a much more positive picture:

- On the left-most plot, the high-density region where context precision and faithfulness were both ~0 has been eradicated. While context precision is roughly the same, this suggests that the pipeline response is now much more faithful to the retrieved context.

- The right-most plot now shows a clear relationship between answer relevancy

and context precision. We can observe two distinct high-density regions:

- The top-right region where both answer relevancy and context precision are high: relevant information can be retrieved and so a highly relevant answer is given.

- The bottom-left region where both answer relevancy and context precision are low: relevant information cannot be retrieved (probably because the query is out of scope) and so a less relevant answer is given.

By leveraging LLM-based evaluations, we enhanced the RAG system's ability to produce more trustworthy and actionable insights, demonstrating how these metrics can significantly improve system outcomes.

It's worth noting that many additional improvements can of course be made to this pipeline to optimize or tailor it to suit your requirements. For instance, you could:

- Use a more sophisticated query revisor component such as Hypothetical Document Embedding (HyDE).

- Further refine the prompt for the LLM that generates the response.

- Combine the retrieval phase with web search capabilities to retrieve more. relevant information where this information is not present in the document (e.g. Corrective RAG).

- Refine the chunking strategy to allow for more precise retrieval of relevant information.

More content coming soon on how to further optimize RAG applications.

#Conclusion

LLM-based evaluations represent a crucial advancement in building reliable AI systems, particularly in scenarios where ground truth labelled datasets may be unavailable or impractical. By leveraging LLM-based evaluations, we move closer to a future where AI systems are not only powerful but also reliable and transparent.

You will now have a good understanding of what LLM-based evaluations are and how they can be used in practice to improve the reliability, performance, and user experience of your AI applications.

Through our case study on the Finance and Prosperity 2024 report, we've

outlined how metrics like Faithfulness,

Context Precision, and Answer Relevancy can:

- Diagnose and characterize the behavior of your AI system

- Identify and reduce hallucinations in AI-generated responses

- Enable real-time quality assessment without requiring labeled datasets

The practical applications of these evaluation techniques extend across various domains:

- Financial Services: Ensure accurate analysis of market reports and regulatory documents

- Healthcare: Validate medical information extraction and summarization

- Legal: Verify faithful interpretation of legal documents and precedents

- Research & Development: Support reliable knowledge extraction from technical documentation

#Next Steps

Want to learn more about how Instill AI can help you build reliable AI systems? Book a call with our team to discuss your use case and how we can help you.

Ready to enhance the reliability of your AI systems? 👉 Sign Up to Instill Cloud and start building trustworthy AI applications today - for free!

Want to delve into the technical details behind this blog post? 👉 Check out our LLM-based Evaluations cookbook.